Squeeze theorem

In calculus, the squeeze theorem (also known as the pinching theorem or sandwich theorem) is a theorem regarding the limit of a function. It states that if two functions approach the same limit at a point, and a third function lies between them near that point, then the third function also approaches that limit there. It was first used geometrically by the mathematicians Archimedes and Eudoxus in an effort to calculate π, and was formulated in modern terms by Gauss.

If the functions f, g, and h are defined on an interval I containing a, except possibly at a itself, and f(x) ≤ g(x) ≤ h(x) for every number x in I with x ≠ a, and <math>\lim_{x \to a} f(x) = \lim_{x \to a} h(x) = L</math>, then <math>\lim_{x \to a} g(x) = L</math>.
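Why the theorem holds is visible at each individual point x: subtracting L throughout f(x) ≤ g(x) ≤ h(x) gives f(x) - L ≤ g(x) - L ≤ h(x) - L, so |g(x) - L| can never exceed the larger of |f(x) - L| and |h(x) - L|, and both of those become small as x → a. The Python sketch below encodes this pointwise bound; `squeeze_bound` is a hypothetical helper name chosen for illustration, not a standard library function.

```python
def squeeze_bound(f, h, x, L):
    """Return max(|f(x) - L|, |h(x) - L|).

    If f(x) <= g(x) <= h(x), then f(x) - L <= g(x) - L <= h(x) - L,
    so |g(x) - L| can never exceed the value returned here.
    """
    return max(abs(f(x) - L), abs(h(x) - L))


# Usage: with f(x) = -|x| and h(x) = |x| (both tend to 0 as x -> 0),
# any g squeezed between them lies within 0.01 of 0 at x = 0.01.
bound = squeeze_bound(lambda x: -abs(x), lambda x: abs(x), 0.01, 0.0)
print(bound)
```

The helper never needs g itself: the whole point of the theorem is that the bounds alone control how far g can stray from L.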

Example

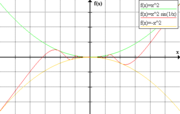

Consider g(x) = x² sin(1/x), defined for x ≠ 0.

Calculating the limit of g as x → 0 by conventional means is difficult. Direct substitution fails because 1/x is undefined at x = 0, and the product rule for limits does not apply because sin(1/x) has no limit as x → 0. L'Hôpital's rule does not help either, since it does not remove the 1/x term. So we turn to the squeeze theorem.
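The obstacle is the factor sin(1/x): it takes the values 1 and -1 at points arbitrarily close to 0, so it cannot approach any single value there. The Python check below evaluates it on two sequences chosen for illustration (x = 1/(π/2 + 2πn) and x = 1/(3π/2 + 2πn), which are assumptions of this sketch rather than part of the original text); floating-point results are only approximately ±1.

```python
import math

# Two sequences tending to 0, chosen for illustration:
# at x = 1/(pi/2 + 2*pi*n), 1/x lands on a peak of sine (value 1);
# at x = 1/(3*pi/2 + 2*pi*n), it lands on a trough (value -1).
ns = [1, 10, 100, 1000]
peaks = [1 / (math.pi / 2 + 2 * math.pi * n) for n in ns]
troughs = [1 / (3 * math.pi / 2 + 2 * math.pi * n) for n in ns]

for x_p, x_t in zip(peaks, troughs):
    # Both x values shrink toward 0, yet sin(1/x) stays near +1 and -1.
    print(f"x={x_p:.2e}: {math.sin(1 / x_p):+.6f}   "
          f"x={x_t:.2e}: {math.sin(1 / x_t):+.6f}")
```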

Let f(x) = -x² and h(x) = x². Since -1 ≤ sin(1/x) ≤ 1 for every x ≠ 0, multiplying through by x² ≥ 0 gives -x² ≤ x² sin(1/x) ≤ x². So f and h are lower and upper bounds (respectively) for g, and f(x) ≤ g(x) ≤ h(x).
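The bounds can be spot-checked numerically. The sketch below samples points on both sides of 0 (the sample grid is an arbitrary choice) and confirms f(x) ≤ g(x) ≤ h(x) at each one. It is a sanity check on finitely many points, not a substitute for the inequality -1 ≤ sin(1/x) ≤ 1 that actually justifies the bounds.

```python
import math


def f(x):
    return -x * x  # lower bound


def g(x):
    return x * x * math.sin(1 / x)  # defined only for x != 0


def h(x):
    return x * x  # upper bound


# Sample points on both sides of 0, skipping x = 0 itself (g is undefined there).
samples = [s * 10.0 ** -k * m for s in (1, -1) for k in range(1, 8) for m in (1, 3, 7)]

violations = [x for x in samples if not (f(x) <= g(x) <= h(x))]
print(f"checked {len(samples)} points, violations: {violations}")
```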

Because f and h are polynomials, and hence continuous, their limits at 0 follow by direct substitution:

- <math>\lim_{x \to 0} f(x) = \lim_{x \to 0} h(x) = 0</math>

Given these two conditions, the squeeze theorem states that

- <math>\lim_{x \to 0} g(x) = 0</math>
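Numerically, the squeeze shows up as |g(x)| never exceeding x², a bound that falls to 0 even though sin(1/x) keeps changing. The Python loop below, over the arbitrarily chosen points x = 10^-k, only illustrates the limit that the theorem establishes.

```python
import math


def g(x):
    return x * x * math.sin(1 / x)


rows = []
for k in range(1, 7):
    x = 10.0 ** -k
    gx = g(x)
    rows.append((x, gx, x * x))  # (x, g(x), upper bound h(x) = x^2)
    print(f"x = {x:.0e}   g(x) = {gx:+.3e}   x^2 = {x * x:.0e}")
```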

Proof of the squeeze theorem

It is given that

- <math>\lim_{x \to a} f(x) = \lim_{x \to a} h(x) = L</math>

so by the definition of the limit of a function at a point, for any ε > 0 there is a δ1 > 0 such that

- if 0 < |x - a| < δ1 then |f(x) - L| < ε

- if 0 < |x - a| < δ1 then -ε < f(x) - L < ε

- if 0 < |x - a| < δ1 then L - ε < f(x) < L + ε

and, for the same ε, a δ2 > 0 such that

- if 0 < |x - a| < δ2 then |h(x) - L| < ε and

- if 0 < |x - a| < δ2 then L - ε < h(x) < L + ε.

Then let δ be the smaller of δ1 and δ2 (δ = min(δ1, δ2)), taking it smaller still if necessary so that every x with 0 < |x - a| < δ lies in I. From the previous statements it follows that

- if 0 < |x - a| < δ then L - ε < f(x) and

- if 0 < |x - a| < δ then h(x) < L + ε.

It is given that f(x) ≤ g(x) ≤ h(x) for every x in I with x ≠ a, so

- if 0 < |x - a| < δ then L - ε < f(x) ≤ g(x) ≤ h(x) < L + ε.

- if 0 < |x - a| < δ then L - ε < g(x) < L + ε.

- if 0 < |x - a| < δ then -ε < g(x) - L < ε.

- if 0 < |x - a| < δ then |g(x) - L| < ε.

This is exactly the definition of the limit of g as x approaches a, so <math>\lim_{x \to a} g(x) = L</math>.
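The proof can be traced concretely on the example g(x) = x² sin(1/x), with bounds f(x) = -x² and h(x) = x², a = 0 and L = 0. Because |f(x) - 0| = |h(x) - 0| = x², one valid choice is δ1 = δ2 = √ε, so δ = min(δ1, δ2) = √ε. The Python sketch below (the helper name `delta_for` and the sampling scheme are illustrative assumptions) picks δ this way and checks the chain L - ε < f(x) ≤ g(x) ≤ h(x) < L + ε at sample points with 0 < |x| < δ. Sampling only illustrates the bookkeeping; it does not replace the proof.

```python
import math


def f(x):
    return -x * x


def g(x):
    return x * x * math.sin(1 / x)


def h(x):
    return x * x


def delta_for(eps):
    # |f(x) - 0| = |h(x) - 0| = x^2 < eps whenever 0 < |x| < sqrt(eps),
    # so delta1 = delta2 = sqrt(eps) work; the proof takes their minimum.
    delta1 = math.sqrt(eps)
    delta2 = math.sqrt(eps)
    return min(delta1, delta2)


L = 0.0
for eps in (1e-1, 1e-3, 1e-6):
    delta = delta_for(eps)
    # Points strictly inside 0 < |x| < delta, on both sides of a = 0.
    xs = [s * delta * t for s in (1, -1) for t in (0.999, 0.5, 0.1, 0.001)]
    assert all(L - eps < f(x) <= g(x) <= h(x) < L + eps for x in xs)
    assert all(abs(g(x) - L) < eps for x in xs)
    print(f"eps = {eps:g}: delta = {delta:.4g}, all {len(xs)} points satisfy |g(x) - L| < eps")
```

In this example the two bounds are symmetric, so δ1 and δ2 coincide; for general f and h they differ, and taking the minimum is what lets both inequalities hold at once.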

The sandwich theorem has no relation to the ham sandwich theorem.