Fitness landscape


In evolutionary biology, fitness landscapes (or adaptive landscapes) are used to visualize the relationship between genotypes (or phenotypes) and reproductive success. Every genotype is assumed to have a well-defined replication rate, often referred to as its fitness. The set of all possible genotypes together with their fitness values is then called a fitness landscape.

In evolutionary optimization problems, fitness landscapes are evaluations of a fitness function for all candidate solutions (see below).

The idea of studying evolution by visualizing the distribution of fitness values as a kind of landscape was first introduced by Sewall Wright in 1932.


Fitness landscapes in biology

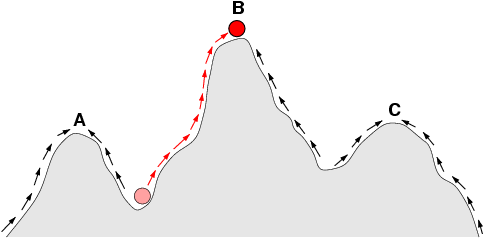

Fitness landscapes are often conceived of as ranges of mountains. There exist local peaks (points from which all paths are downhill, i.e. to lower fitness) and valleys (regions from which most paths lead uphill). A fitness landscape with many local peaks surrounded by deep valleys is called rugged. If all genotypes have the same replication rate, on the other hand, a fitness landscape is said to be flat.
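The notions of local peak, rugged landscape, and flat landscape can be made concrete on a toy example. The following is a minimal sketch, assuming genotypes are binary strings of length 3 and that two genotypes are neighbors when they differ by a single mutation; the fitness values and the names `neighbors` and `local_peaks` are hypothetical and chosen only for illustration.

```python
from itertools import product

def neighbors(genotype):
    """All genotypes that differ from `genotype` at exactly one position."""
    for i in range(len(genotype)):
        yield genotype[:i] + (1 - genotype[i],) + genotype[i + 1:]

def local_peaks(fitness):
    """Genotypes whose single-mutation neighbors all have strictly lower fitness."""
    return [g for g in fitness
            if all(fitness[n] < fitness[g] for n in neighbors(g))]

genotypes = list(product((0, 1), repeat=3))

# Smooth landscape: fitness is the number of 1s, so there is a single peak.
smooth = {g: float(sum(g)) for g in genotypes}

# More rugged landscape (hypothetical values): a second, lower peak at the
# opposite corner, separated from the first by a valley of lower fitness.
rugged = dict(smooth)
rugged[(0, 0, 0)] = 2.5

# Flat landscape: every genotype has the same replication rate.
flat = {g: 1.0 for g in genotypes}

print(local_peaks(smooth))  # [(1, 1, 1)]
print(local_peaks(rugged))  # [(0, 0, 0), (1, 1, 1)]
print(local_peaks(flat))    # []
```

With the strict definition used here (all paths lead downhill), the flat landscape has no peaks at all.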

An evolving population typically climbs uphill in the fitness landscape until it reaches a local optimum (Fig. 1). There it remains unless a rare mutation opens a path to a new, higher fitness peak. At high mutation rates, however, this picture is somewhat simplistic: a population may be unable to climb a very sharp peak, or it may drift away from a peak it had already found. In finite asexual populations, the related process in which the fittest genotypes are irreversibly lost as deleterious mutations accumulate is known as Muller's ratchet.
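The uphill climb toward a local optimum can be sketched as an adaptive walk. This is a deliberately simplified model, not a description of real population dynamics: it assumes the whole population is represented by a single genotype, that mutations change one position at a time, and that the fittest neighbor always takes over. The landscape values and the function name `adaptive_walk` are hypothetical.

```python
from itertools import product

def neighbors(genotype):
    """All genotypes that differ from `genotype` at exactly one position."""
    for i in range(len(genotype)):
        yield genotype[:i] + (1 - genotype[i],) + genotype[i + 1:]

def adaptive_walk(fitness, start):
    """Follow the fittest single-mutation neighbor until none is fitter."""
    path = [start]
    current = start
    while True:
        best = max(neighbors(current), key=fitness.get)
        if fitness[best] <= fitness[current]:
            return path  # local optimum reached: no uphill step is left
        current = best
        path.append(current)

# Hypothetical landscape with a global peak at (1, 1, 1) and a lower
# local peak at (0, 0, 0).
landscape = {g: float(sum(g)) for g in product((0, 1), repeat=3)}
landscape[(0, 0, 0)] = 2.5

print(adaptive_walk(landscape, (0, 1, 1)))  # ends at the global peak (1, 1, 1)
print(adaptive_walk(landscape, (0, 0, 1)))  # ends at the lower peak (0, 0, 0)
```

The second walk illustrates how a population can become trapped: from the lower peak, every single mutation reduces fitness, even though a higher peak exists elsewhere.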

Fitness landscapes in evolutionary optimization

Apart from the field of evolutionary biology, the concept of a fitness landscape has also gained importance in evolutionary optimization methods such as genetic algorithms and evolution strategies. In evolutionary optimization, one tries to solve real-world problems (e.g., engineering or logistics problems) by imitating the dynamics of biological evolution. For example, a delivery truck with a number of destination addresses can take a large variety of different routes, but only very few will result in a short driving time.

In order to use evolutionary optimization, one has to define for every possible solution s to the problem of interest (i.e., every possible route in the case of the delivery truck) how 'good' it is. This is done by introducing a scalar-valued function f(s), called the fitness function; its values over all candidate solutions form the fitness landscape. Scalar-valued means that f(s) is a simple number, such as 0.3, while s can be a more complicated object, for example a list of destination addresses in the case of the delivery truck. A high f(s) implies that s is a good solution. In the case of the delivery truck, f(s) could be the number of deliveries per hour on route s.

The best, or at least a very good, solution is then found in the following way. Initially, a population of random solutions is created. Then the solutions are repeatedly mutated and selected, with solutions of higher fitness being retained, until a satisfactory solution has been found.
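The mutate-and-select procedure can be sketched for the delivery truck as follows. This is one minimal variant under stated assumptions, not a definitive algorithm: destinations are hypothetical random points in the plane, the truck drives in straight lines at constant speed from a depot at the origin, a mutation swaps two stops, and selection keeps the fitter half of the population unchanged. All names and parameters (`evolve`, `pop_size`, `generations`, and so on) are illustrative.

```python
import math
import random

def route_length(route, stops, depot=(0.0, 0.0)):
    """Total distance: depot -> each stop in the given order -> depot."""
    points = [depot] + [stops[i] for i in route] + [depot]
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def fitness(route, stops, speed=1.0):
    """f(s): deliveries per unit of driving time on route s (higher is better)."""
    return len(route) / (route_length(route, stops) / speed)

def mutate(route, rng):
    """Return a copy of the route with two randomly chosen stops swapped."""
    child = list(route)
    i, j = rng.sample(range(len(child)), 2)
    child[i], child[j] = child[j], child[i]
    return child

def evolve(stops, pop_size=30, generations=200, seed=0):
    rng = random.Random(seed)
    n = len(stops)
    # Initial population of random solutions (random visiting orders).
    population = [rng.sample(range(n), n) for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        # Selection: keep the fitter half of the population unchanged.
        population.sort(key=lambda r: fitness(r, stops), reverse=True)
        survivors = population[: pop_size // 2]
        history.append(fitness(survivors[0], stops))
        # Mutation: refill the population with mutated copies of survivors.
        offspring = [mutate(rng.choice(survivors), rng)
                     for _ in range(pop_size - len(survivors))]
        population = survivors + offspring
    best = max(population, key=lambda r: fitness(r, stops))
    return best, history

# Hypothetical problem instance: 8 destinations at random positions.
instance_rng = random.Random(1)
stops = [(instance_rng.uniform(-10, 10), instance_rng.uniform(-10, 10))
         for _ in range(8)]
best_route, history = evolve(stops)
print(best_route, fitness(best_route, stops))
```

Because the survivors are carried over unchanged, the best fitness recorded in `history` never decreases from one generation to the next. The result is a good route, but there is no guarantee that it is the best possible one.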

Evolutionary optimization techniques are particularly useful when it is easy to evaluate the quality of a single solution but infeasible to go through all possible solutions one by one. For the delivery truck, computing the driving time of one particular route is easy, but the number of possible routes grows factorially with the number of destinations, so checking every route becomes impractical once there are more than a handful of destinations.
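To see how quickly exhaustive search becomes impractical, one can count the routes directly. The sketch below assumes that a route is simply an ordering of n distinct destinations, so that there are n! routes; the function name `route_count` is hypothetical.

```python
import math

def route_count(n_destinations):
    """Number of distinct visiting orders for n destinations, i.e. n!."""
    return math.factorial(n_destinations)

for n in (5, 10, 15, 20):
    print(n, route_count(n))
```

Five destinations give 120 routes, while fifteen already give more than 10^12, which is why evaluating a small, evolving sample of routes is attractive.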

The concept of a scalar-valued fitness function f(s) also corresponds to the concept of a potential or energy function in physics. The two concepts differ only in that physicists traditionally think in terms of minimizing the potential function, while biologists prefer the notion that fitness is being maximized. Therefore, multiplying a potential function by -1 turns it into a fitness function, and vice versa.
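Written out, with V(s) as an assumed symbol for the potential function (the text itself does not name it), the correspondence is:

```latex
f(s) = -V(s), \qquad \arg\max_{s} f(s) = \arg\min_{s} V(s)
```

A solution that maximizes fitness is therefore exactly one that minimizes the corresponding potential.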

Further reading

- Sewall Wright. "The roles of mutation, inbreeding, crossbreeding, and selection in evolution". In Proceedings of the Sixth International Congress on Genetics, pp. 355-366, 1932.

- Richard Dawkins. Climbing Mount Improbable. New York: Norton, 1996.

- Stuart Kauffman. At Home in the Universe: The Search for Laws of Self-Organization and Complexity. New York: Oxford University Press, 1995.

- Melanie Mitchell. An Introduction to Genetic Algorithms. Cambridge, MA: MIT Press, 1996.

- Foundations of Genetic Programming, Chapter 2 (http://www.cs.ucl.ac.uk/staff/W.Langdon/FOGP/intro_pic/landscape.html)
