Linear logic

|

|

In mathematical logic, linear logic is a type of substructural logic that denies the structural rules of weakening and contraction. The interpretation is of hypotheses as resources: every hypothesis must be consumed exactly once in a proof. This differs from usual logics such as classical or intuitionistic logic where the governing judgement is of truth, which may be freely used as many times as necessary. To give an example, from propositions A and A ⊃ B one may conclude A ∧ B as follows:

- Modus ponens (or implication elimination) on the assumptions A and A ⊃ B to conclude B.

- Conjunction of the assumption A and (1) to conclude A ∧ B.

This is often symbolically represented as a sequent: A, A ⊃ B ⊢ B. Both lines in the above proof "consume" the fact that A is true; this "freeness" of truth is usually what is desired in formal mathematics.

However, truth is often too abstract or unwieldy when applied to statements about the world. For example, suppose I have a quart of milk from which I can make a pound of butter. If I decide to make butter out of all of my milk, I cannot then conclude that I have both milk and butter! Yet, the logical schema outlined above lets me conclude that milk, milk ⊃ butter ⊢ milk ∧ butter (here, milk stands for the proposition "I have a quart of milk", etc.). The failure of ordinary logic to accurately model this activity is due to the nature of milk, butter, and resources in general: the quantity of resources is not a free fact to be used or disposed at will, like truth, but rather must be carefully accounted in every "state change". The accurate statement about my butter making activity is:

- From a quart of milk and a process to convert a quart of milk into a pound of butter, I obtain a pound of butter.

In linear logic this is written: milk, milk ⊸ butter ⊩ butter, using different connectives (⊸ instead of ⊃) and a different notion of logical entailment.

Linear logic was proposed by the French mathematician Jean-Yves Girard in 1987.

| Contents |

Linear connectives

The logical connectives are re-examined in this resource-interpretation; each connective splits into multiplicative and additive versions, which correspond to simultaneous and alternative presence, respectively. To motivate the connectives, let us use the example of a vending machine.

Multiplicative conjunction, also called tensor (written ⊗), denotes simultaneous occurrence of resources. For example, if I insert 50 cents into the vending machine, then the vending machine simultaneously has cent ⊗ cent ⊗ ⋯ ⊗ cent (50 of them). If I buy two sticks of gum, them I obtain gum ⊗ gum. If the machine had four sticks of gum (gum ⊗ gum ⊗ gum ⊗ gum) before, it has two now: gum ⊗ gum. The constant 1 is used to denote the absence of a resource; it functions as a unit of tensor: A ⊗ 1 ≡ 1 ⊗ A ≡ A.

Additive conjunction, also known as internal choice (written &) represents alternative occurrence of resources. If in the vending machine there is a packet of chips, a bar of candy, and a bottle of soft drink, all worth 50 cents each, then after inserting 50 cents I can get exactly one of these products. After the purchase, I have candy & chips & drink, i.e., exactly one of the conjuncts. I cannot use ⊗ for the outcome because I cannot get all of these products simultaneously with only 50 cents. Additive conjunction has a unit top (written ⊤, with A & ⊤ ≡ ⊤ & A ≡ A); it represents the nullary alternative. It is often used when the exact accounting of resources is burdensome or impossible. For example, if I don't actually care what I get from the machine, or indeed if I get anything at all, then the outcome as far as I am concerned is ⊤. This unit can be used together with ⊗ to define a minimal composition of resources: if I want a candy bar at least, but possibly something else also, then my desired outcome is candy ⊗ ⊤.

Additive disjunction, also known as external choice (written ⊕) represents an choice over which one has no control. For example, if the vending machine allows me to gamble (i.e., "insert 50 cents and win a candy bar, a soft drink, or an all expenses paid vacation to Hawaii") then the outcome of the purchase is candy ⊕ drink ⊕ vacation. I know that I shall get one of the choices, but I have no control over the result. Note the difference from internal choice: if I had candy & drink & vacation, then I could choose the vacation if I wasn't feeling particularly hungry or thirsty. The unit of ⊕ is written 0, and represents a lack of outcome or catastrophic failure — say the vending machine jams, or the world is destroyed by a passing comet.

Multiplicative disjunction, also called par (written ⅋) represents simultaneous goals that must be reached. It is somewhat harder to motivate using the vending machine metaphor. If there is a particular flavour of candy that I like very much, but I have forgotten its name, then I can buy multiple bars and try to search for my favourite. Each purchase consumes some of my money, but my only control over the outcome of this experiment is by altering my order of selection of the various candy bars. In this case the outcome is candy1 ⅋ ⋯ ⅋ candyn. The unit of ⅋ is bottom (written ⊥), and stands for the empty goal: imagine refusing to insert any money, or pulling the "coin return" lever without making a selection.

Linear implication. The conjunctions and disjunctions above define the state of the world, but the description is static. For state change, linear logic defines the connective of linear implication (written ⊸), sometimes also known as multimap or lolli because of its lollipop-like shape. The proposition A ⊸ B means: consume resource A to achieve resource B. For example, if I buy a candy bar with two quarters, then I can describe the change as quarter ⊗ quarter ⊸ candy. Note that the implication itself is a resource that must obey the principle of single consumption.

Exponential connectives. The collection of connectives so far are excellent for describing state and transitions, but they are too weak if one needs the usual notion of truth. This is obviously very desirable because a discussion about the actual world should not preclude standard mathematical reasoning. Linear logic uses an idea from modal logic to embed the usual logic by means of a pair of exponential operators.

- Re-use or copying is allowed for propositions using the "of course" exponential operator (written !). Logically, two occurrences of !A as hypotheses may be contracted into a single occurrence.

- The collection of goals is allowed to be extended with propositions using the "why not" operator (written ?). Logically, any fact can be weakened by including an additional conclusion ?A.

Under the resource interpretation, ! encodes arbitrary production and ? encodes arbitrary consumption.

Flavours of linear logic

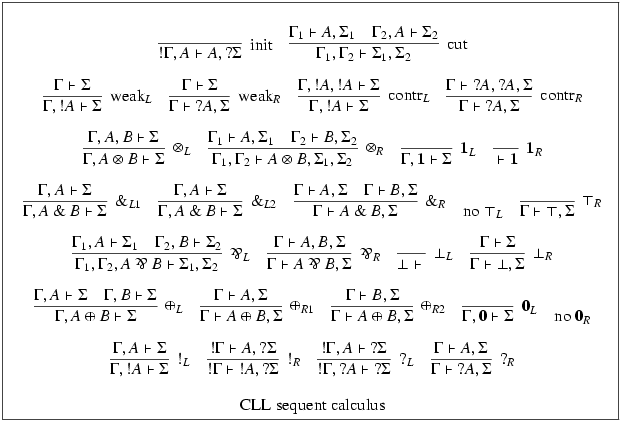

Linear logic has many restrictions and variants. The primary axis of variation is along the classical/intuitionistic divide. Classical linear logic (CLL) is the original linear logic as proposed by Girard. In CLL every connective has a dual. The following is a two-sided presentation of CLL as a sequent calculus:

Linear implication is definable in terms of linear negation and multiplicative disjunction in CLL: A ⊸ B ≡ ¬ A ⅋ B. This is familiar from other classical logics: for example, the usual implication ⊃ is similarly definable: A ⊃ B ≡ ¬ A ∨ B. Such definitions of course require a notion of negation, but in classical logic one can use duals: the dual of A, written A⊥ is defined as follows.

| (A ⊗ B)⊥ | = | A⊥ ⅋ B⊥ |

| (A & B)⊥ | = | A⊥ ⊕ B⊥ |

| (A ⊕ B)⊥ | = | A⊥ & B⊥ |

| (A ⅋ B)⊥ | = | A⊥ ⊗ B⊥ |

The logical units have similar duals; for example: ⊤⊥ = 0.

Intuitionistic linear logic (ILL) allows only a single conclusion. Unlike CLL, connectives in ILL do not have perfect duals. Indeed, the connectives par and question mark, and the propositional constant bottom (⊥), are absent in ILL because their introduction requires multiple conclusions. As a result, linear implication is a basic connective in ILL.

Other variants of linear logic variously allow or disallow certain connectives, giving rise to logics with varying complexity. The following are the most common variants.

- Multiplicative linear logic or MLL. This variant allows only the multiplicative connectives tensor and par (and their units). It is decidable, but the decision problem is NP complete.

- Multiplicative additive linear logic or MALL, which adds the additive connectives to MLL. This variant is also decidable with a PSPACE complete decision problem.

- Multiplicative exponential linear logic or MELL, which is MLL plus the exponential operators. The decision problem for MELL is currently open.

- Multiplicative additive exponential linear logic or MAELL, which has all the above connectives. This variant is undecidable.

There are also first- and higher-order extensions of linear logic, but their development is standard (See first-order logic and higher-order logic.)

The closest sub-structural cousins of linear logic are:

- Affine logic, which extends linear logic with the structural rule of weakening. The connectives one and top are indistinguishable in affine logic.

- Strict logic or relevant logic, which extends linear logic with the structural rule of contraction.

- Non-commutative logic or ordered logic which removes the structural rule of exchange from linear logic. Multiplicative conjunction divides further into a pair of fuses (left fuse and right fuse).

See also

External links

- Article on Linear logic (http://www.rep.routledge.com/article/X006) at the Routledge Encyclopedia of Philosophy

- Game Semantics or Linear Logic? (http://www.csc.villanova.edu/~japaridz/CL/gsoll.html)

- Patrick Lincoln (http://www.csl.sri.com/users/lincoln/)'s excellent Introduction to Linear Logic (http://www.csl.sri.com/~lincoln/papers/sigact92.ps) (Postscript)